A book by William H. Calvin, University of Washington, Seattle, Washington 98195-1800 USA

THE CEREBRAL CODE: Thinking a Thought in the Mosaics of the Mind. Available from MIT Press and amazon.com. Copyright ©1996 by William H. Calvin

Resonating with Your Chaotic Memories

Environment is that fifth essential for a full-fledged darwinian process. Environments aren’t just one thing, though we often speak of them as having one key feature that dominates all others; for example, as if it were simple efficiency at cracking open difficult seeds that makes the difference between the less successful and the more successful finches in the Galapagos, at least during a drought. But typical environments are multifaceted — mental environments ought to be particularly variegated — and they fluctuate widely over a lifetime. From the standpoint of a darwinian process operating in neocortex, the environment ought to include such things as physiological state, sensory inputs from the outside environment, and memories of earlier ones from an inner environment. In each cortical area, physiological state ought to include the relative ratios of those four major neuromodulators (acetylcholine, serotonin, norepinephrine, and dopamine) arriving from subcortical sites, plus all the peptide neuromodulators. Also, preparedness for action (those holds on movements that might be arriving from prefrontal cortex, those agendas being monitored from orbital frontal) creates behavioral “sets.” Thalamus is particularly influential at “activating the EEG” in small regions of neocortex, surely an aspect of selective attention that forms part of the environment for a darwinian process. All of these factors will impinge on the ability of a cortical area to sustain a spatiotemporal pattern, to clone new territory, and to resurrect a spatiotemporal pattern that has fallen silent. A major part of our environment, however, is a memorized one, and the psychology of memory suggests that it is far more unreliable than is usually thought. Sensory inputs are, by the time they reach the areas of cortex involved with working memory, filtered by perceptual processes. Furthermore, the sensations are averaged over some time past. 
We don’t see the jittery visual field that our eye movements suggest we ought to be seeing; instead we see a stable model of that visual world, complete with some of the mistakes we call illusions and hallucinations. A memory recall, to a psychologist or neurophysiologist, is an even more artificial construction because it isn’t anchored by current sensory input. Recalls are created on the fly, sometimes differently on successive occasions, and are potentially tainted by injections from one’s imagination. At times, reality becomes suspect because the validity of your memories is called into question. Studies of eyewitnesses to staged mishaps have long suggested that even those eyewitnesses who initially recall the cars, people, and sequence of events correctly can, after a few weeks, get them scrambled up without realizing it.


The memorized environment is surely the biggest problem we face as we try to make sense of the setting for a darwinian shaping-up process. All the other contributors are potentially active patterns of cell firings or their immediate aftermath. Environments memorized some time ago
surely involve passive spatial-only patterns. If a hexagon’s activity is the relevant spatiotemporal pattern, what is the corresponding spatial-only pattern that can recreate it? It is presumably the synaptic connectivity within the hexagon (in actuality, within a minimum of two adjacent hexagons but, most of the time, likely spanning dozens). In any one hexagon of cortex, we can distinguish between internally generated spatiotemporal patterns and imposed ones (such as those that are due to recruitment from neighboring hexagons). It’s the same distinction as in learning a new dance step, where traditional locomotion spatiotemporal patterns get in the way of copying the instructions from the caller. It’s not an either-or situation: each hexagon’s connectivity can either aid or hinder an imposed melody via resonance phenomena. Resonance is what makes Puget Sound (misnamed; it’s really a long bay) have a multistory tidal range, while the nearby Pacific Ocean seacoast has only about one-third the range — merely up to the first floor windows, as it were. The time that it takes seawater to slosh back and forth in the bay is similar to the tidal period, and so the amplitude builds up, much like gently pushing a child on a swing at the pendulum’s characteristic rate.
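The swing and tidal-basin examples can be made concrete with a driven, damped oscillator. The sketch below is only an illustration: the natural period, the damping value, and the function name are assumptions chosen for readability, not measurements of Puget Sound or of cortex. It computes the steady-state response to a push of fixed strength at several driving periods, and the response is largest when the push arrives near the system's own period.

```python
import math

def steady_state_amplitude(drive_period, natural_period=12.4, damping=0.05, force=1.0):
    """Steady-state amplitude of a driven, damped harmonic oscillator (unit mass).

    All parameter values are illustrative assumptions (periods in hours,
    damping in 1/hours); they are not fitted to any real bay or neuron.
    """
    w = 2 * math.pi / drive_period       # driving angular frequency
    w0 = 2 * math.pi / natural_period    # natural angular frequency
    return force / math.sqrt((w0**2 - w**2) ** 2 + (damping * w) ** 2)

if __name__ == "__main__":
    for period in (6.0, 10.0, 12.4, 15.0, 24.0):
        amplitude = steady_state_amplitude(period)
        print(f"drive period {period:5.1f} h -> amplitude {amplitude:8.2f}")
```

The same push produces a small response when it is badly timed and a large one when it matches the natural period, which is the sense in which a hexagon's connectivity could aid or hinder an imposed pattern.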

There isn’t a one-to-one mapping between spatial-only and spatiotemporal patterns within the nervous system in the manner of a phonograph recording or sheet music. A given long-term connectivity surely supports many distinct spatiotemporal patterns. In the spinal cord, for example, a given connectivity supports a half-dozen gaits of locomotion, each a distinct spatiotemporal pattern involving many muscles and the relative times of their activation. It is presumably the initial conditions that determine which pattern is elicited from the connectivity. One aspect of initial conditions is that ghostly blackboard, the fading facilitation of synapses that was caused by earlier activity in the hexagon. Another aspect is the pitter-patter of inputs from thalamus and elsewhere; inputs that are not strong enough to initiate impulses can nonetheless bias the hexagon’s resonances. There are also those neuromodulators impinging diffusely on neocortex from subcortical areas; likely the relative activity in these systems enhances or masks characteristic resonances. Somewhat more specific inputs from amygdala or thalamus may “shift attention” in a similar way.


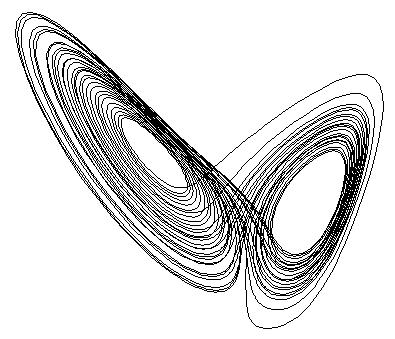

Chaotic attractors are a more general way of thinking about resonance. There’s a fuller discussion of chaos in the Glossary, but the simplest attractors are the point attractors, such as the rest state at the bottom of the pendulum’s damped swing. The clocklike limit-cycle attractors are familiar from the relaxation oscillators that flush and refill reservoirs; there’s one that causes the neuron to fire rhythmically on occasion. Bursting cells may have a quasiperiodic attractor but, in Walter Freeman’s words, “if a system flutters like a butterfly, it may have a chaotic attractor.”

Chaotic behavior (in the mathematical sense of the word) looks random. The aperiodic appearance of the waking EEG is one example; physiological tremor is another. Chaos is a pattern difficult to predict because it isn’t recursive. But chaos, like mixing with a kitchen egg beater, isn’t noise; it is still produced deterministically by the underlying mechanisms.
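A compact way to see the three kinds of attractor side by side is the logistic map, a standard textbook system that is used here purely as a stand-in (it is not a model of a neuron or of the EEG). In this sketch the parameter values `r = 2.8`, `3.2`, and `4.0` are illustrative choices that give, respectively, a point attractor, a two-step limit cycle, and chaotic behavior. The second function shows the deterministic-but-unpredictable character of chaos: two starting points that differ by one part in a billion soon end up far apart.

```python
def logistic_orbit(r, x0=0.2, skip=500, keep=8):
    """Iterate x -> r*x*(1-x), discard a transient, and return the next values."""
    x = x0
    for _ in range(skip):
        x = r * x * (1 - x)
    orbit = []
    for _ in range(keep):
        x = r * x * (1 - x)
        orbit.append(x)
    return orbit

def divergence(r, x0=0.2, eps=1e-9, steps=60):
    """Largest gap that opens between two almost identical starting points."""
    a, b = x0, x0 + eps
    gap = 0.0
    for _ in range(steps):
        a = r * a * (1 - a)
        b = r * b * (1 - b)
        gap = max(gap, abs(a - b))
    return gap

if __name__ == "__main__":
    print("point attractor (r=2.8):", [round(v, 4) for v in logistic_orbit(2.8)])
    print("limit cycle     (r=3.2):", [round(v, 4) for v in logistic_orbit(3.2)])
    print("chaotic         (r=4.0):", [round(v, 4) for v in logistic_orbit(4.0)])
    print("gap from a 1e-9 offset, r=2.8:", divergence(2.8))
    print("gap from a 1e-9 offset, r=4.0:", divergence(4.0))
```

Running the chaotic case twice from the same starting value gives the same sequence each time; only the tiny offset makes the two runs part company.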

In deep sleep, the EEG’s chaotic behavior is replaced by limit-cycle rhythmicity; in Parkinsonism, the normal chaotic attractor of physiological tremor is said to be replaced by fixed-point attractors (rigidity) and limit-cycle attractors (rhythmic tremor). If there are two attractors mixed up, the system may loop around one attractor and then switch to the other, and in a manner far more complicated than the familiar example of the compound pendulum. The transition between attractors is effectively a phase transition analogous to solid-liquid-gas
transitions. If we are comatose or under deep anesthesia, point attractors dominate and we experience rest or rigidity. The hierarchy of mental functions builds up from this lowest level, where very little is working, to the level of deep sleep characterized by slow waves, which are also characteristic of the late “clonic” part of seizures. Above that is a level of functioning such as stupor and dementia, where things aren’t working very well but at least aren’t stuck in the limit cycle of oscillations. As we switch from walking to jogging to running, we change in and out of the various attractors. When the Necker cube switches back and forth between top-down and bottom-up perspectives, it’s presumably because we’re switching in and out of lobes of an attractor.


Our more intelligent mental states are sometimes said to flirt around the “edge of chaos.” This term is from complexity theory, which envisages an adaptive system that ranges between a rigid order and a more flexible disorder, controlling the degree of permitted disorder. We may range from satisfaction at getting something right (convergent thinking) to blue-sky divergent thinking; in those more creative moments, some of our cortical systems may be poised near the edge of chaos. So, just to abstract the term even further from its everyday connotations, chaos is controlled disorder! “Capture” is another aspect of resonance/attractors that will be useful here: a spatiotemporal pattern that comes close to an attractor’s pattern will be altered to conform with that of the attractor. When you feel captured by that washboarded road, it’s because you really are being shoehorned into an attractor. This convergence is another way of saying that attractors have a basin of attraction, a wide set of starting conditions that all eventually lead into the same attractor cycle. Thus we have convergence to a quasi-reproducible pattern — and that enables a form of sensory generalization (as I’ll discuss in Act II, the classic example of generalization is getting a monkey to treat a large triangle the same as a small inverted triangle). Categorical perception is but one example of cognitive phenomena that could be effected by capture, as when we listen to a graded range of speech sounds progressing from /ba/ to /pa/. We hear them not as a changing series but as a monotonous repetition of /ba/ that, suddenly, switches to /pa/ repeating over and over (newborns don’t have this problem, lacking capture categories). The reason that many native Japanese speakers confuse the English /l/ and /r/ sounds (as when rice comes out as lice) is an in-between Japanese phoneme whose well-learned-in-infancy category captures both English phonemes. 
That means that the Japanese speaker cannot hear his mistake in English pronunciation (“But I did pronounce it exactly the way that you said!”).
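Capture and basins of attraction can be sketched with the simplest system that has two point attractors. The code below is a toy under stated assumptions: the dynamics `dx/dt = x - x**3`, the step size, and the mapping of the two attractors onto /ba/ and /pa/ are all hypothetical illustrations, not a model of auditory cortex. A graded series of starting values is fed in, and each one is pulled to one of only two end states, so the output switches abruptly even though the input changes smoothly.

```python
def settle(x, rate=0.1, steps=200):
    """Follow dx/dt = x - x**3 by small Euler steps from starting value x."""
    for _ in range(steps):
        x += rate * (x - x**3)
    return x

def categorize(x):
    """Label the attractor that captures a given starting value."""
    return "/pa/" if settle(x) > 0 else "/ba/"

if __name__ == "__main__":
    # A graded continuum of hypothetical stimuli, from clearly /ba/ to clearly /pa/.
    continuum = [-0.9 + 0.2 * i for i in range(10)]
    for stimulus in continuum:
        end_state = settle(stimulus)
        print(f"{stimulus:+.1f} -> settles at {end_state:+.3f}, heard as {categorize(stimulus)}")
```

Every starting value on one side of zero lies in the same basin, which is why the in-between stimuli are not heard as in-between.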


After all this background on memory, and the loose analogies to chaos and complexity, we can now consider how an unorganized cortical territory is influenced by resonances when a spatiotemporal pattern arrives by lateral cloning. If the cloning pattern resonates with the connectivity, annexation will be easier than if it doesn’t. Indeed, perhaps the new region will adopt the rhythm so enthusiastically that the pattern will locally persist, even if the lateral copying that ignited it were to be discontinued.

On the other hand, were the cortex sufficiently excitable that all patterns reliably cloned laterally, resonances would not matter. The local maintenance of the rhythm, however, would then be totally dependent on lateral copying. It would be easily erased, in comparison to resonating regions that kept going when a supply route was temporarily interrupted. The copying-only case is like a group of novice dancers learning a new spatiotemporal pattern by following instructions from a caller (as in square dance); when the caller stops, so do they. But eventually, as the dancers develop their own internal resonances, they can generate the patterns on their own, sans caller.

Locally, graded effects might be important for getting the rhythm started, much as piano keys can be struck softly or vigorously. Once into lateral cloning mode, however, the notes might be as stereotyped as those plucked notes on a harpsichord, more digital than analog. With copying from copies, you probably need a digital code, as shades of gray eventually go to all-or-nothing extremes (much as photocopying the previous photocopy of a photograph will progress to an image with a solarized black-and-white appearance). So our cerebral code is likely to be binary, even though calling it up from scratch may have important analog aspects.

Note that we now have a serious reason for treating the hexagon as more than just a convenient group name for a collection of N points from N triangular arrays. Yes, extension of territory initially involves each triangular array recruiting a new node in the no-man’s-land, but resonances mean that some sequences fit into a locally favored melody, and some don’t. The favored melody is a property of the hexagon’s connectivity, as manifested by the attractors.
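The photocopy-of-a-photocopy argument can be checked with a few lines of arithmetic. This is a minimal sketch under an explicit assumption: each copying generation is modeled as a slight contrast boost around mid-gray followed by clipping, with a hypothetical boost factor of 1.3. Any copying step that exaggerates differences, however slightly, would behave similarly; the specific numbers are not taken from a real copier or from cortex.

```python
def photocopy(gray, contrast=1.3):
    """One copying generation: boost contrast around mid-gray, then clip to [0, 1].

    The boost factor is a hypothetical stand-in for any copying step that
    exaggerates differences; it is not a model of a real photocopier or neuron.
    """
    return min(1.0, max(0.0, 0.5 + contrast * (gray - 0.5)))

def copy_of_copies(grays, generations):
    """Copy the previous copy repeatedly and return the final generation."""
    for _ in range(generations):
        grays = [photocopy(g) for g in grays]
    return grays

if __name__ == "__main__":
    original = [0.10, 0.35, 0.45, 0.55, 0.65, 0.90]   # 0 = black, 1 = white
    for n in (0, 1, 5, 20):
        print(n, [round(g, 2) for g in copy_of_copies(original, n)])
```

After enough generations every intermediate gray has been pushed to one extreme or the other, so only a two-valued code survives repeated copying.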


Memorizing a spatiotemporal pattern would seem to be a matter of creating a new connectivity, probably in a number of hexagons. Although the usual LTP-to-structural-enhancement model of learning (some synapses are enlarged in the days following LTP) seems to suffice here, remember that we are superimposing some connectivity changes upon a pre-existing
cortical connectivity, one that already has some attractors inherent in it. Tacking on yet another attractor may not always be possible. One reason is that strong local attractors can likely capture the new pattern once lateral copying is discontinued, altering the pattern to the previously stored one, and so the new one is never memorized. But don’t forget the spatial aspect: a successful new spatiotemporal pattern has probably temporarily occupied dozens to hundreds of hexagons. Although some hexagons may capture the new pattern once active cloning discontinues, others may be able to accommodate the permanent connectivity change that would make later recall of the new spatiotemporal pattern possible. This redundancy accords well with Lashley’s notion that memory traces are widespread, while still allowing for some expert areas. While the connectivity change, when successfully made, might eliminate one of the weaker attractors of the pre-existing collection, as I will discuss in chapter 9, a locally lost basin of attraction probably has stronger versions elsewhere. Once cortical “slots” start to fill up, finding a suitable niche for adding a new attractor may require hexagonal mosaics that temporarily annex a lot of cortex (you might need to pay a lot of attention to a new event, in order for it to be recorded). This also means that the new attractor may be scattered, evocable from isolated pockets here and there (one of the surprises of early chaos theory was that basins of attraction could be parcellated), but the scattered nature might make it more difficult to get a critical mass going during recall attempts. Memory recall is now easier to imagine with such attractor mosaics: the revival of the ingrained spatiotemporal pattern could start from any place in the original annexation territory and, once re-ignited, spread by lateral cloning to annex a somewhat different territory. 
This is a nice feature because recall need not begin from the very feature detectors (perhaps scattered over some millimeters) that started everything, back during acquisition, before synchronous recruitment extended some triangular arrays. The cloning might even spread back into the original feature detectors, but I can see no reason why this would be necessary for either percepts or concepts. The criterion for successful output may simply be enough active clones of a spatiotemporal pattern to instruct the motor pathways, not activity in a particular neuron set. As an aside, note that neocortical memory capacity might well be limited. The price of memorizing a new phone number, well enough to recall it tomorrow, might be eliminating a resonance for some other memory — say, the name of your first-grade teacher. Usually, there would be other attractors for that name elsewhere, but eventually you might eliminate the last one because the new attractor’s connectivity change will produce a connectivity pattern in those hexagons which can no longer resurrect that characteristic pattern. The remaining connectivity might still resonate with imposed patterns, so you might continue to recognize the name when you heard it, but recall per se will stop working. The age at which this gain-one-lose-another state of affairs begins is an empirical question. Nothing in the hexagons theory suggests whether it should occur at age 10 or age 80, but the theory does suggest an experimental strategy of looking for a developing gap between recognition and recall of long-term memories.
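The idea that a pattern is stored passively in connectivity and later revived from a partial or degraded start can be illustrated with a Hopfield-style network. To be clear about the assumptions: this is a generic associative memory from the neural-network literature, substituted here for illustration, and it is not the hexagonal-mosaic mechanism itself. The 16-unit size and the two patterns named `apple` and `banana` are hypothetical. The weights play the role of the spatial-only pattern, and the settled activity plays the role of the recalled pattern.

```python
def train(patterns):
    """Hebbian outer-product weights with no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, cue, sweeps=5):
    """Update all units together until the state stops changing."""
    state = list(cue)
    for _ in range(sweeps):
        new = [1 if sum(wij * s for wij, s in zip(row, state)) >= 0 else -1
               for row in w]
        if new == state:
            break
        state = new
    return state

if __name__ == "__main__":
    apple = [1] * 8 + [-1] * 8      # two hypothetical stored patterns,
    banana = [1, -1] * 8            # chosen to be orthogonal
    weights = train([apple, banana])
    cue = list(apple)
    cue[0], cue[9] = -cue[0], -cue[9]   # degrade the cue in two places
    print("recovered apple from degraded cue:", recall(weights, cue) == apple)
    print("banana is also stable:", recall(weights, banana) == banana)
```

Both patterns coexist in one set of weights, and which one appears depends on the starting state, in line with the point that one connectivity can support several distinct spatiotemporal patterns.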


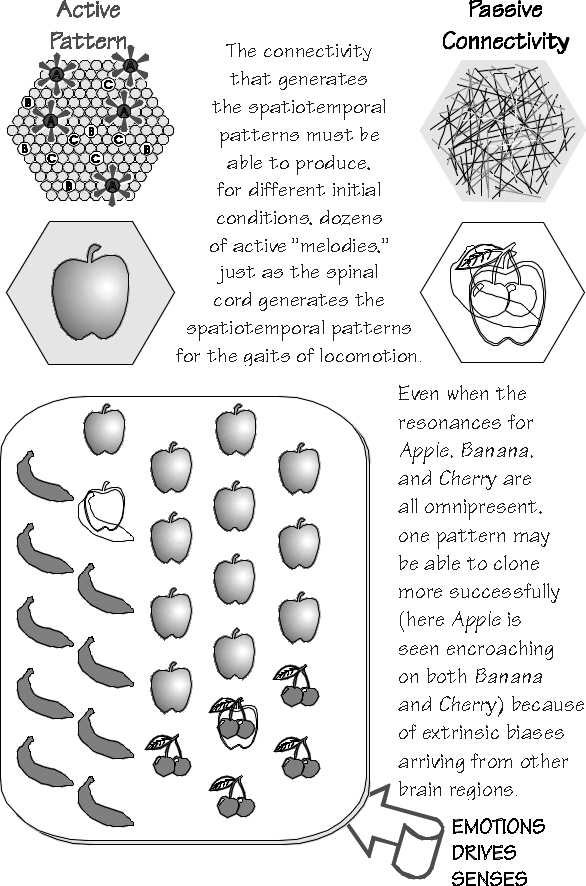

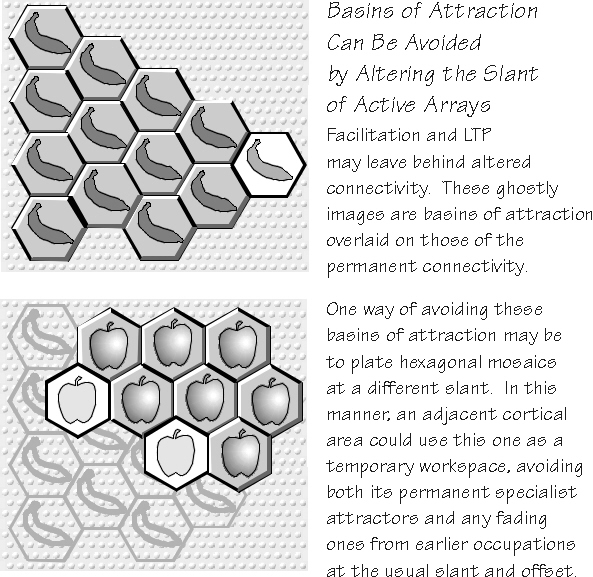

A simple sensory decision now looks rather like that hand movement decision earlier. The cortex would have some resonances for Banana and Apple and Cherry; there would be hexagons that resonated to all three, just as the spinal cord circuitry supports multiple gaits of locomotion. Some hexagons might resonate to certain patterns
better than others. Which succeeds in cloning the largest territory, however, will surely depend on both permanent basins of attraction and some temporary ones. For example, hexagons from which the Apple spatiotemporal pattern has retreated will likely have some lingering aftereffects; perhaps facilitation and LTP will leave the connectivity in a state more likely to support the reestablishment of Apple. Such passive patterns are superimposed on the hexagon’s permanent connectivity; they might make it easier (or more difficult) for them to be activated (psychologists love to talk about proactive and retroactive inhibition of memories). One can also imagine ways to avoid a resonance, simply by using a triangular array on a different slant in the cortex. The very same territory might be associated with other rhythms operating through triangular arrays on some other slant; they, too, could have anchored hexagons in this “alternate universe.” This has interesting implications for the multifunctionality of neocortical areas, the conflict between Lashley’s equipotentiality and the neurologists’ specialized anomias. In particular, one could avoid specialist attractors by using other slants and so achieve a temporary work space.


Many memory recall phenomena seem dependent on recreating a physiological state similar to that at initial presentation, as when things learned under the influence of alcohol are more easily recalled after a few drinks. Some of this might be explained by staging attractors, and some by finding the right slant for the array.

Much of the foregoing could be said for schemes other than triangular array extension and the consequent hexagonal mosaics. As Peter Getting said about motor systems, “input may not only activate a network but may configure it into an appropriate mode to process that input.” The entrainment of the triangular arrays, however, has some specific predictions to make about what gets memorized and what doesn’t.

As mentioned earlier (p. 32), the NMDA types of postsynaptic receptors for the excitatory neurotransmitter glutamate are especially common in the superficial layers. The connections between our recurrently exciting superficial pyramidal neurons surely utilize NMDA-like properties. What features might NMDA augment? The NMDA receptor for glutamate also requires the presence of some glycine in order to open up its channel through the neuron’s membrane. (Another “duality” is that, in the spinal cord, glycine is an inhibitory neurotransmitter.) The channel associated with the NMDA receptor seems to allow both sodium and calcium ions into the neuron. But its most singular “dual” property is that, unlike conventional synaptic channels, the NMDA ion channel is controlled both by the binding of the neurotransmitter and by the pre-existing voltage across that membrane.

It seems that magnesium ions tend to get stuck in the channel, effectively blocking it, so that even if glutamate binds to the NMDA receptor, no current flows through the ion channel to contribute to an excitatory postsynaptic potential. An EPSP may nonetheless be seen, of course, because non-NMDA channels are also activated by the glutamate, allowing sodium ions in, but the EPSP will not be as large as otherwise. Blocked NMDA channels represent a reserve; there’s extra responsiveness available for special occasions requiring emphasis. Reducing the voltage gradient across the membrane (depolarization), however, tends to pop loose the trapped Mg++.
In consequence, the next glutamate that binds to the NMDA receptor will gate a flow of sodium and calcium ions into the parent channel and so raise the internal voltage of the dendrite to make a larger EPSP. The functional consequence of this channel clearing is that the dendrite becomes much more sensitive to the history of arrivals. For example, two impulses arriving a few milliseconds apart sum together nonlinearly. The second hump may be substantially larger than otherwise, thanks to the NMDA channels cleared by the first EPSP. In LTP experiments, a long train of impulses in a given pathway is produced, likely clearing out most of the blocked NMDA channels. The presynaptic terminal also becomes more likely to release glutamate when the next impulse arrives, thanks to some retrograde transmitter from the NMDA postsynaptic response feeding back to the presynaptic terminal. But an EPSP arriving in another part of the dendrite may be nearly as effective at augmenting the NMDA channel’s EPSP; it’s the voltage change produced at the NMDA channel’s location that is important, not whether that particular channel contributed to the voltage production. This means that near-synchronous inputs (say, within about 10 msec of one another) are far more effective than would otherwise be the case, and that postsynaptic impulses retrogradely invading the dendrites should also unblock some NMDA channels (providing yet another mechanism for “Hebbian synapses”). Absolute synchrony isn’t required, as it is simply a matter of how long it takes for another Mg++ to get trapped in the ion channel (often tens to hundreds of msec). Well-focused triangular nodes would benefit from a narrower window that could detect conduction-time coincidences (though surround inhibition is also available to shrink and focus the hot spot, once it develops). 
Repeated “synchrony” of two inputs, as predicted for the triangular arrays, might be the closest natural situation to the LTP experiments that produce enhancements in synaptic strength of a few hundred percent. Add to this the widespread suspicion that long-lasting changes in synaptic strength are built on a scaffolding provided by LTP changes, and you have one plausible recipe for triangular arrays embedding a new pattern in the connectivity, there to linger for a lifetime. Although triangular array extensions can presumably occur without NMDA receptors, it seems clear that the NMDA properties could provide a lot of enhancement to recurrent excitation’s tendencies to entrain neurons. And NMDA isn’t the only enhancer: the apical dendrite (the tall stalk that rises from the cell body toward the cortical surface before branching; see p. 26) seems to have many voltage-sensitive ion channels, including the persistent sodium channels and calcium channels. They allow antecedent depolarizations, in effect, to amplify the currents produced by a subsequent synaptic input. Indeed, more than half the 2X amplification seen at the cell body, from mimicking glutamate synaptic activation a half mm up the apical dendrite, seems to be from such mechanisms, with NMDA-dependent mechanisms under the synapse contributing a similar amount to the overall amplification.
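The voltage dependence of the magnesium block, and the resulting nonlinear summation of two closely spaced inputs, can be sketched numerically. The sigmoid below follows the form commonly attributed to Jahr and Stevens in the modeling literature; its constants (0.062 per mV and 3.57 mM), the 1 mM magnesium level, the 15 mV depolarization, and the arbitrary EPSP units are all assumptions brought in for illustration and do not come from this chapter.

```python
import math

def unblocked_fraction(v_mv, mg_mM=1.0):
    """Fraction of NMDA channels free of Mg++ at membrane potential v_mv (in mV).

    Sigmoid form and constants are assumed from the modeling literature,
    not taken from the text.
    """
    return 1.0 / (1.0 + (mg_mM / 3.57) * math.exp(-0.062 * v_mv))

def epsp(v_mv, non_nmda=1.0, nmda_max=2.0):
    """Toy EPSP size (arbitrary units): a fixed non-NMDA part plus a gated NMDA part."""
    return non_nmda + nmda_max * unblocked_fraction(v_mv)

if __name__ == "__main__":
    rest = -70.0                 # assumed resting potential, mV
    depolarized = rest + 15.0    # assumed state shortly after a first EPSP
    print(f"unblocked at rest:        {unblocked_fraction(rest):.3f}")
    print(f"unblocked when depolarized: {unblocked_fraction(depolarized):.3f}")
    print(f"first EPSP (from rest):   {epsp(rest):.3f}")
    print(f"second EPSP (depolarized): {epsp(depolarized):.3f}")
```

Because the second input arrives while more channels are clear, it produces the larger response, which is the history sensitivity described above.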


Automatic gain controls (AGCs) have become familiar from the way that some tape recorders automatically control their loudness. On replay, you hear the background hiss fade out, shortly after someone begins talking, whereupon background voices also become fainter. That is because the recording gain is automatically adjusted downward when, averaged over the last second or two, there has been lots of input. AGC mechanisms per se have not yet been described in neocortex, though surround inhibition accomplishes somewhat the same thing, as does long-term depression. AGCs would seem useful for avoiding a fog of the characteristic patterns. With all the recurrent excitation in superficial layers of neocortex, there is a great need to keep the system from becoming regenerative. Voltage-sensitive potassium currents help to keep the lid on things, but that’s a mechanism limited to inside one neuron, not one that can influence neighbors as well, in the manner of one loud voice turning down the loudness of the background voices in that tape recording.

Association cortex lacks a lot of the spontaneous firing that we see elsewhere in the central nervous system. Sustained firing is usually seen only in the major sensory receiving areas, in response to particularly effective stimuli, or in motor areas during preparation for movement. But while cortical neurons are individually capable of firing rhythmically to sustained synaptic inputs, they usually don’t. One survey shows that they’re suspiciously nonrhythmic, with intervals between impulses being much more random than we would have expected. Something such as a cortical AGC is presumably limiting the more vigorous rhythmic activity.

From the NMDA properties, we can imagine how a simple, nonspecific AGC might work. After all, the extension of a triangular array contributes to a lot of nonspecific activation of cortical cells. Besides the hot spots where excitatory annuli overlap, there is much stimulation of nonfocal areas by the other axon branches. Suppose that Mg++ was released by activity (or that some diffusing metabolite served to increase intracellular free Mg++ levels), so that there was more opportunity to block the NMDA channels that had been cleaned out. This would preferentially reduce the synaptic strengths in those paths.
If the magnesium messenger diffused for macrocolumnar distances or the glia did similar work, it might well dampen the impulse activity in the rest of the region, leaving only the more optimally stimulated hot spots as the islands of activity that continue to maintain the raised “sea level.” Inhibitory mechanisms are the usual way of reducing the widespread anatomical funnel to the much smaller physiologically active area, but simple AGCs of this sort might easily help narrow the catchment zone for effective recurrent excitation. The necessity for a little glycine as a co-factor for the usual excitatory neurotransmitter at NMDA receptors would fit well with an AGC that spread a lot of glycine around as a neuromodulator: while suppressing excitability in general, it could conceivably augment NMDA synapses so that their neurons stood out. (Sea level might rise, but the hilltops still above water might be stimulated to grow!) Contrast is everything.
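The tape-recorder version of an AGC is easy to sketch, and doing so shows why a loud input makes the background fainter. The code below models only the recorder analogy; the running-average rule, the `memory` constant, and the signal levels are illustrative assumptions, and nothing here is claimed to describe a cortical mechanism.

```python
def agc(samples, target=1.0, memory=0.9, floor=1e-3):
    """Scale each sample by a gain set from a running average of recent input size.

    `memory` sets how far back the average reaches; all values are
    illustrative assumptions.
    """
    level = 1.0   # assume the recorder starts with its gain turned down
    out, gains = [], []
    for x in samples:
        level = memory * level + (1 - memory) * abs(x)
        gain = target / max(level, floor)
        gains.append(gain)
        out.append(gain * x)
    return out, gains

if __name__ == "__main__":
    hiss, voice = 0.05, 1.0
    samples = [hiss] * 30 + [voice] * 30 + [hiss] * 30
    out, gains = agc(samples)
    print(f"background just before the voice: {out[29]:.3f} (gain {gains[29]:.2f})")
    print(f"voice at the end of the phrase:   {out[59]:.3f} (gain {gains[59]:.2f})")
    print(f"background just after the voice:  {out[60]:.3f} (gain {gains[60]:.2f})")
```

The same quiet input is reproduced much more faintly right after the loud passage than before it, because the gain has been set by the recent average and not by the current sample.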


We need to abandon the string quartet at this juncture. If my digital-analog analysis is correct, each of the members of that little choral cluster is really a one-note specialist. You will probably have some difficulty imagining a specialized soprano that can only sing a high C, and nothing else. Because the hexagon has on the order of a hundred minicolumns, however, we can refine our musical analogy to a harpsichord keyboard; each singer is really only one key, either sounding briefly or keeping quiet. You get to map the hundred minicolumns to your musical synthesizer keyboard in whatever way sounds most pleasing to your ears, as each has no inherent tonal quality. My analogy is simply a way of translating one pattern into a more familiar one, just as we translate high-pitched dolphin vocalizations into our own auditory
range in order to aid our efforts at detecting patterns in the performance. Theory cannot yet provide much guidance on some critical questions: How many attractors can a hexagon’s worth of connectivity support before filling up? How easy is it to overlay another basin of attraction on the existing ones? Or to dislodge an existing attractor? How might “subliminal” inputs to a neocortical area (ones that do not themselves generate impulses, much less triangular arrays) bias the attractors and their approach basins? Note that the present theory does not aspire to the traditional goal of cortical circuit models, those transformations of sensory input that underlie perception. It aspires, rather, to the more abstract aspects of analogy needed for categories and creativity, able to generate new levels of sophisticated complexity. Nor does this theory have much to say about what determines the temporal aspect of spatiotemporal patterning — in the manner, say, of the coincidence cascades, postulated by Moshe Abeles, or the structuring role of the EEG “carriers” that may aid the widespread establishment of synchrony, emphasized by Peter König, Wolf Singer, and Andras Engel. My theory can, fortunately, say a lot more about some of the overall spatial dynamics, the possible neocortical equivalents of boom and bust.


Another classic example is the washboarded road, whose bumps and ruts interact with the spatiotemporal bouncing pattern of the moving vehicle. There may be some speed at which the vehicle’s tires and springs resonate with the bumps. Increase or decrease your speed by 30 percent and you will probably escape the jarring ride, unless, of course, too many other drivers have tried the same trick and thus created a secondary set of bumps for your new speed. The bumps are created, after all, by bouncing vehicles. Connectivities are the bumps and ruts of the brain; indeed, they are created in part by carving (removing pre-existing connections) and in part by building up other connections.

Figure-ground illusions provide an example of how an unchanging stimulus pattern may give rise to several (sometimes alternating) perceptions, even if you fixate on a single point.

But such enhancement by association is only one instance of a much more general phenomenon: that of setup actions (or, as the more extreme versions are known, reconfiguration actions).
