
Conversations with Neil’s Brain: The Neural Nature of Thought & Language

Copyright 1994 by William H. Calvin and George A. Ojemann. You may download this for personal reading but may not redistribute or archive without permission (exception: teachers should feel free to print out a chapter and photocopy it for students).

William H. Calvin, Ph.D., is a neurophysiologist on the faculty of the Department of Psychiatry and Behavioral Sciences, University of Washington. George A. Ojemann, M.D., is a neurosurgeon and neurophysiologist on the faculty of the Department of Neurological Surgery, University of Washington.


Seeing the Brain Speak

“Time for the picture show, I see,” Neil says from under the drapes. I glance up briefly from studying Neil’s brain to see that the neuropsychologist is indeed maneuvering the slide projector box around the anesthesiologist, positioning it so that Neil can clearly see its back-projection screen.

Neil’s conversational commentary on what he sees comes from the brain before me, so brightly illuminated and colorful. Nothing gray about it. The sounds may emerge from Neil’s mouth, but the words were chosen and sent on their way by something soft that lies just beneath Neil’s exposed brain surface — that very cerebral cortex I’m looking at. Somehow, it creates a “conductor” for the orchestra of nerve cells — a “voice” that talks to itself much of the time and only occasionally speaks out loud. A society of bees may create a beehive, but a society of nerve cells can create a person, one capable of pondering ethics, writing poetry, and performing neurosurgery. One that may be capable, someday, of understanding itself.

Each time I gaze through this surgical window into a talking brain, I reflect that the view back to Earth would be anticlimactic, should anyone ever offer me a ride on a moon rocket. A person lives in that brain I am now seeing. Somehow the real Neil, an authentic voice, emerges from all those nerve cells. And narrates its life’s story. Somehow.

I may marvel at it all, but George is probably busy thinking about exactly which parts of the brain are essential for language abilities. Not for patients in general, but for Neil — his version of all the possible variations on the basic plan. Neurosurgeons must avoid crippling language abilities. When epileptic areas are close to language areas — and often they are — it becomes very important to map language abilities before removing anything.

We finish recording the electrical activity from the surface of the brain, obtaining more details than we’d seen in the pre-op EEGs. The characteristic signature of the idling epileptic process — those backfire-like spikes — is seen in Neil’s temporal lobe, and in regions that are often involved with language processes. George cannot simply remove those areas, because he would cause more trouble than he would cure. So the next question is, Exactly where does Neil’s language come from?

[FIGURE 14 Hemispheric asymmetries and the planum temporale]


JUST LOOKING AT THE SURFACE of the brain, you can’t see any anatomy that is peculiarly language cortex. Indeed, it is very hard to see the four “lobes” of each
half of the brain (frontal, parietal, occipital, and temporal). As Neil said when we discussed this earlier, “It’s hardly a four-leaf clover.” Just as there aren’t any agreed-upon boundaries in the United States between “the East” and “the Midwest,” so it is hard to say exactly where the parietal lobe stops and the temporal lobe begins. “At least,” Neil replied, “the right half is just the mirror image of the left.” But no — the two cerebral hemispheres are actually quite asymmetric in various ways. A hemisphere isn’t usually half, as the right side is often a bit wider than the left side. The right side protrudes in the front, slightly beyond the tip of the left frontal lobe; conversely, in the rear, the left occipital lobe protrudes beyond the right. “So the average brain is skewed?” Yes, and that’s not all. The sylvian fissure, the great infolded cleft that separates the temporal lobe from the rest of the cerebral hemisphere, is usually long and straight on the left side. On the right side, it is shorter and curls upward more noticeably. This reflects a right-left difference in the size of several brain areas. One of these is the “planum temporale,” the part of the temporal lobe buried in the sylvian fissure, extending from the auditory area to the back end of the fissure. Functionally, the two halves of the human brain aren’t symmetric either. Some brain functions are “lateralized,” especially language, which usually resides in the left side of the brain. Several decades ago, the neurologist Norman Geschwind observed that the planum temporale was larger on the right side in some people — and in about the same percentage of the population as had right-brain dominance for language. He inferred from this that the relative size of this area was an “anatomic marker” for lateralization of language, although the actual function of this area is not known. “Do the apes have that asymmetry?” Neil asked. 
It is present in orangutans and chimpanzees, suggesting that at least one anatomic substrate for language appears earlier in evolution. But it is not present in gorillas — which evolved midway between orangs and chimps — and gorillas can be taught a simple gestural language. So it isn’t simple. “Well, then, when does the fetus first start getting asymmetric?” The planum temporale asymmetry can be identified in fetuses of twenty-six weeks gestational age, the beginning of the third trimester. So babies are born with an anatomical specialization that is probably related to language. But the anatomy provides only this thin layer of clues about where language is located. Altered function provides many clues. When something goes wrong under the hood of a car, we may at last discover what some peculiar-looking part had been doing all along. The brakes stop working, and once we get around to looking under the hood rather than at the wheels, we discover fluid leaking from a part that a diagram in the owner’s manual mysteriously labels the “master cylinder.” Thanks to what stops working (the brakes), we can finally identify the function of that particular part of the clutter in the engine compartment. Greek philosopher-physicians of 2,500 years ago used similar reasoning when they recognized that the left brain seems to control the right side of the body while the right half of the brain controls the left side. “If the car’s engine compartment were organized along crossover lines,” Neil observed, “the left wheels’ power would come from the right side of the engine. And the left wheels’ brakes would come from a master cylinder on the right side. And vice versa.” Instead, modern cars get power to all wheels from the same engine that occupies both sides of the engine compartment. “And on most American cars, the brakes on both the right and left sides are controlled from a master cylinder on the left side of the engine compartment. 
Steering is similarly left-sided.” That arrangement is, you know, rather like language in the human brain. In 1861, the French surgeon Paul Broca said that, in his experience, it was usually damage to the left side of the brain that affected language, not right-sided damage. Such aphasia — as damage-induced disturbances in language abilities are collectively known — is distinguished from mere difficulties in speech itself. The “power” for speech obviously involves both sides of the chest and tongue and lips, but the mechanism that selects the words is on the left side of the brain, just as the steering and braking originate from the left side of the car. Broca called the left brain “dominant” for language. “Does that have anything to do with the right hand being, well, right-handed?” That kind of cerebral dominance was subsequently confused with that other specialty of left-brain function: running the right hand. It used to be thought that left-handed persons were the ones with right-brain language, that their brains were just mirror images in a few aspects, similar to the ways that cars in the United Kingdom or Japan differ from cars in Europe and the Americas. “But they’re not? They’re just all mixed up?” It now appears that most left-handers have language in the left brain, just like right-handers. About 5 percent of all people have language in the right brain and another 5 to 6 percent have significant language function in both halves. Although left-handers are found more often in the reversed-dominance and mixed-dominance groups, no pattern of hand use reliably predicts the side of the brain where the major language area resides. Broca also had an example of where language might live within the left brain. He had been caring for a stroke patient who seemed to understand much of what was said to him. 
This patient, Leborgne, could follow directions and help care for some of the other patients in the hospital — but couldn’t get out any word except “tan.” His condition wasn’t explained by a paralysis of the relevant muscles, as Leborgne could eat and drink and say “tan-tan.” When Leborgne died, Broca examined his brain to see what had been damaged. The stroke turned out to have affected a region of the brain just above the left ear, including the lower rear portion of the frontal lobe, the lower front portion of the parietal lobe, and the upper part of the temporal lobe. Despite this varied damage involving three of the four lobes, Broca was most impressed with the frontal lobe damage because it extended deeper than elsewhere.

[FIGURE 15 Nineteenth-century Broca-Wernicke concept of language cortex]

Broca proposed that the damaged lower rear portion of the frontal lobe was responsible for this patient’s language problems. He suggested that this region controlled language output. Neurologists soon came to speak of “Broca’s aphasia” or “expressive aphasia” when encountering that characteristic language problem, and of “Broca’s area” to describe the lower rear portion of the frontal lobe on the left side that is in front of the motor strip.

[FIGURE 16 Neil’s slide show, as naming sites are mapped]


THE SLIDE SHOW is finally underway, with Neil naming the objects that pop up on the back-projection screen every few seconds. He’s well rehearsed at this task, and we know that he can correctly name all of the slides.

“I know what that is,” Neil says. “It’s a, ah, a....” George removes the handheld stimulator from Neil’s cortex. “An elephant,” Neil says at last, with some exasperation.

Another slide pops up on the screen. “This is an apple,” Neil says routinely. George is nonetheless stimulating the cortex, but at another spot, a short distance away from the previous site. This new site seems unrelated to naming.

The electric current has been set to confuse a small brain area, about the size of a pencil eraser. Stimulation causes Neil to make mistakes and is thought to work by inactivating or confusing that small part of the brain (or regions to which it strongly connects). When some sites are stimulated, Neil can’t name common objects that he ordinarily has no trouble naming immediately. George is searching for those sites. The slide projector keeps Neil busy for some time while George explores; we’re listening for any difficulties that Neil might have.

Neil has been instructed to say the phrase “This is a...” before the name of the object. There are a few places, particularly in and around Broca’s area, where Neil cannot even utter the preamble: he cannot talk at all. But arrest of all speech can occur for many reasons and doesn’t truly define language cortex. Anomia, Neil’s inability to utter the name after successfully speaking the preamble, is closer to being a specific problem with language. Because all of us suffer from anomia on occasion (“Whatever is her name? It’s right on the tip of my tongue!”), naming difficulties are thought to reflect a mild, momentary inefficiency in language processing — which is what makes naming a good survey test for use in the operating room.

The site where stimulation blocked “elephant” is not specific to elephants; stimulation later while showing other objects reveals problems in naming them as well. This seems to be a “naming site,” not an elephant site.
Unlike computer memories that store things in pigeonholes, a memory such as “elephant” is stored in a distributed way throughout whole areas of the brain, overlapping with other memories in ways we do not yet understand.

[FIGURE 17 What Neil sees: The elephant slide]


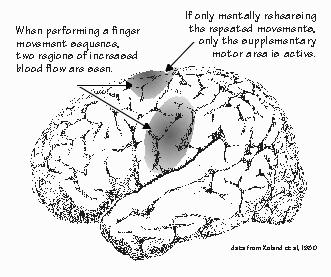

EVEN IF THERE AREN’T ELEPHANT SITES, I suppose that there might be places for particular classes of words, such as nouns or verbs. Or, as Neil asked the other day, is there a place for animals, another for vegetables, and another for minerals? Are there places where word phrases are constructed, and other places that decode what you hear for its meaning? Do bilingual persons have a different place for each language? Is language cortex organized differently in men and women, in the articulate and the tongue-tied, in the deaf using sign language? There are many possibilities, but only limited ways to get any answers.

For brain functions other than language, most of what we know comes from studies of the brains of other animals. Mimicking speech sounds is not, of course, language, any more than a tape recorder is capable of generating language. Parrots can acquire a vocabulary, but interest has centered on the protolinguistic abilities of our closest relatives among the apes. Apes, unfortunately, are not usually very good at mimicking human speech sounds. But if their teachers point at the appropriate symbol on a chart when speaking a word, the ape can later “speak” the word by merely pointing at its symbol. The same symbol-board technique is widely used with autistic children, so that they can eventually convey the meaning they associate with a symbol just by pointing.

Relatively few apes have been taught any type of language, and then with vocabularies (a few hundred words) that are small by human standards (typically 10,000 to 100,000 words). The good students among the bonobos (pygmy chimps) can understand novel sentences as complicated as “Go to the office and bring back the red ball,” where the test situation is novel (balls are not usually found in the office) and has many opportunities for error (numerous balls, some red, are in plain sight in the same room). They do this about as well as a two-year-old child, although they (and such a child) may not construct such sentences on their own.
The sentences they do construct are usually within the realm of protolanguage, rather like that of the tongue-tied tourist with a similarly small vocabulary, or the Broca’s aphasic. While many such sentences are “Give me” requests, a bonobo will occasionally construct a request such as “Sue chase Rose” (watching a chase scene is preferred entertainment for young bonobos, almost as good as being chased themselves), which does not involve the bonobo itself as either subject or object of the verb. But while some animals respond impressively to commands, they (and young children) are not known for being able to answer free-form questions (not even “Name three kinds of fruit”) or to converse about the weather. This may change as more infant apes are reared to use symbolic languages from an early age, learning from skilled preschool language teachers. The abilities of the bonobos, in particular, seem quite promising. Yet almost nothing is known about the brain organization underlying language in such apes. Most observations on language still must be made in humans. “And only after strokes, I suppose?” Neil had asked. Until recently, most of our understanding of the human brain organization for language depended on the accidents of nature rather than on carefully designed scientific experiments. Both are useful. It is like the difference between a natural history museum and a science museum: one shows you the varied experiments of nature that have survived, while the other shows you what makes them tick. Understanding the mechanisms may someday provide workarounds for the disabled, speed everyone’s learning, even increase the versatility of our language-based reasoning about the complexities of the everyday world. For a long time, the natural history museum of aphasia and dyslexia was all we had. All types of language disorders involve some difficulty in naming objects. 
That is why object naming is used to screen brain areas for their role in language during Neil’s operation. Occasionally, anomic aphasia — difficulty finding the right name — is the only problem after a stroke. But usually the patient’s problems are more specific than simple, everyday anomia; sometimes the difficult words belong to a particular class of words, giving us some insight into how language is organized in the brain.

In the years since Broca, researchers have noticed that patients with stroke damage to Broca’s area utter mostly nouns. When they do utter word phrases, they tend to omit the verb endings, most pronouns, and the conjunctions. Talking about a movie, such a patient said, “Ah, policeman... Ah... I know! Cashier!... Money!... Ah! Cigarettes... I know... this... beer... mustache....” They also have difficulty mimicking modeled sequences of simple tongue and mouth movements. Sometimes they can sing words that they cannot speak. They are very aware of their problems. And, violating the expressive-receptive, front-back dichotomy, they also have some problems understanding what others say. They especially have trouble with the words that reveal sentence structure, such as conjunctions and prepositions. But their understanding of other types of words is often intact.

Patients with damage to Wernicke’s area usually have reasonable sentence construction but often misuse words. They may substitute a word that, by either sound or meaning, is related to the correct one. They seem unaware of their problem. And they often talk at some length: an aphasic patient named Blanche, when asked her name, replied, “Yes, it’s not Mount Everest, Mont Blanc, blancmange, or almonds put in water.... You know. You be clever and tell me!”

There are many other kinds of aphasia.
Given the Broca-Wernicke dichotomy that established the framework, it is perhaps natural that these variants were ascribed to some combination or interconnection, such as damage to the pathways between the frontal and parietal language areas. Some aphasics can repeat back a sentence containing words they find hard to use when constructing a sentence themselves. Such transcortical aphasia also has a converse, called conduction aphasia, in which the words can be used spontaneously but the patient has difficulty when asked to repeat back a sentence. Surprisingly, the language problems produced by other forms of damage do not necessarily follow these principles derived from stroke patients. Wernicke’s aphasia is rare with head injuries or tumors, even when Wernicke’s area is damaged. This has led to the suggestion that Wernicke’s aphasia is a feature of a special population of patients, elderly individuals prone to strokes who may have, in addition to the local injury from the stroke, more widespread brain damage from age or chronic disease of the brain’s blood vessels. The symptoms depend on the baseline from which you start.  [FIGURE 18 Mentally rehearsing movements in the supplementary motor area....] “Well,” Neil once said, “At least brain damage isn’t the only way to find out if a region of the cerebral cortex has something to do with language. Now you’ve got all the fancy techniques to try out on me.” For example, injecting a short-acting anesthetic into the left carotid artery that supplies the left side of the brain, and then later into the right carotid that supplies the right side of the brain, can demonstrate whether language is housed in the left or the right brain. A failure of naming ability during the several minutes when one side is asleep suggests that it is the side where language lives. Neil was given this test as part of his evaluation prior to surgery, establishing his left brain dominance for language. 
Sometimes catheters can be threaded into the smaller arteries of the brain, and the drug squirted out to temporarily block smaller cortical areas. The most localized blocking method, the electrical stimulation mapping that is part of Neil’s operation, requires some neurosurgery first but can localize functions to areas about the size of a pencil eraser. The applied electric current does not damage the brain. If I touch the handheld stimulator to the back of my hand, I feel a slight tingle. The current just confuses things, reversibly blocking functions such as language. And this allows a wide range of traditional experimental designs, developed from studying nonlanguage functions in animal brains, to be imported to the study of language in humans. But, for ethical reasons, such high-resolution methods that temporarily manipulate brain regions can be used only on those persons already undergoing neurosurgery for their own benefit and volunteering their time.

There are also survey methods for producing images that show how hard the brain is working. Since they can operate through the intact skull, they are often suitable for use in normal volunteers for hours at a time. One new technique measures magnetic fields and has recently revealed a wave of activity that regularly sweeps the brain from front to back. Other important methods measure regional changes in blood flow within the brain. When nerve cells become very active, they increase the blood flow to their local region of brain, and such activity measurements can be combined with clever selection of tasks to yield images of what’s where.

[FIGURE 19 Cortical blood flow changes when seeing words, hearing words]

In positron emission tomography (PET), the active regions are identified with the help of a radioactive tracer. There is an even newer technique with better spatial resolution, in which groups of cells about one millimeter apart can be distinguished.
In functional magnetic resonance imaging (sometimes called “fast MRI” or FMRI), the change in the amount of oxygen in the blood can be determined by changes in tissue resonance in a magnetic field, and a map made of where such changes occur. When subjects speak the same word repeatedly, different regions of the brain “light up” than when they mentally rehearse the same word without speaking aloud.

The fundamental technique is one of looking at differences in blood flow between one state of the brain and another. For each subject, there is a measurement of resting blood flow when the subject is doing nothing more than looking at a small cross in the middle of a video display. Then a word is shown (but the subject makes no verbal response), and a map is made of the blood flow during that condition. The first image is then subtracted from the second to show the regions of additional activity during silent reading. In a third condition, the subject might read the word aloud; the image from silent reading is then subtracted to show what vocalization adds in the way of neural activity. In a fourth condition, the subject might be asked not to read the word aloud but instead to speak a verb that matches the noun on the screen, such as saying “ride” when the word “bike” is presented. The image from the third condition is then subtracted, to emphasize what verb-finding adds to the blood flow changes associated with speaking a noun aloud.

[FIGURE 20 Cortical changes when speaking words, and when generating words]

When nerve cells are active, they also alter the reflectance of light from the brain surface. “Before” and “after” pictures can be digitally subtracted from one another, yielding an image of which cortical sites are working harder.
This “intrinsic signal” technique presently requires exposure of the brain surface and can be used only at operations like Neil’s; it is beginning to provide information on language activity localization that complements that obtained from susceptibility to disruption by electrical stimulation. The imaging techniques show where neurons are active; stimulation mapping shows where they are essential for naming.

Each of these kinds of information — strokes, tumors, stimulation, and activity — provides a different perspective on brain language organization. In most cases, they suggest that language extends well beyond the naming sites defined by electrical stimulation. Yet avoiding damage to those naming sites seems adequate to head off language deficits after neurosurgery, so there appear to be both “essential” and “optional” language areas. An adequate understanding of how the brain generates language will need to account for the results from all techniques.

[FIGURE 21 Neil’s object-naming sites]
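The subtraction design described above for blood-flow imaging (rest, then a word shown silently, then the word read aloud, then a matching verb spoken) can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any particular laboratory processes its images: each “image” is assumed to be a tiny grid of made-up numbers rather than measurements, and the helper names `subtract` and `active_cells`, along with the threshold, are hypothetical. Real PET or fMRI analysis also needs image alignment, averaging across subjects, and statistical testing, none of which appears here.

```python
# Toy sketch of the subtraction logic used in blood-flow imaging studies.
# Each "map" is a small grid of made-up numbers standing in for regional
# blood flow; these values are illustrative only, not data.

Map = list[list[float]]

def subtract(condition: Map, baseline: Map) -> Map:
    """Return condition minus baseline, cell by cell (grids must match)."""
    if len(condition) != len(baseline) or any(
        len(row_c) != len(row_b) for row_c, row_b in zip(condition, baseline)
    ):
        raise ValueError("maps must have the same shape")
    return [[c - b for c, b in zip(row_c, row_b)]
            for row_c, row_b in zip(condition, baseline)]

def active_cells(diff: Map, threshold: float) -> list[tuple[int, int]]:
    """List (row, col) positions whose increase exceeds the threshold."""
    return [(i, j) for i, row in enumerate(diff)
            for j, value in enumerate(row) if value > threshold]

# Hypothetical toy maps for the four conditions described in the text.
rest       = [[50, 50, 50], [50, 50, 50]]  # looking at a small cross
see_word   = [[58, 50, 50], [50, 50, 50]]  # word shown, no verbal response
read_aloud = [[58, 57, 50], [50, 50, 50]]  # word read aloud
say_verb   = [[58, 57, 50], [50, 50, 56]]  # matching verb spoken instead

# Each comparison subtracts the previous condition, isolating what one step adds.
reading      = subtract(see_word, rest)
speaking     = subtract(read_aloud, see_word)
verb_finding = subtract(say_verb, read_aloud)

for label, diff in [("reading", reading), ("speaking", speaking),
                    ("verb finding", verb_finding)]:
    print(label, active_cells(diff, threshold=3))
```

Run as written, the loop prints `reading [(0, 0)]`, `speaking [(0, 1)]`, and `verb finding [(1, 2)]`. Chaining the comparisons, rather than comparing every condition with rest, is what lets each difference image show only what the newest task step adds. The same `subtract` helper would also express the “before” and “after” comparison of the intrinsic-signal method.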


NEIL’S NAMING SITES are not exactly what would have been predicted from the nineteenth-century Broca-Wernicke model, even as modified by later findings from many other stroke patients. Naming in Neil is blocked in only three areas, each smaller than a dime. One of these areas is in the lower part of the frontal lobe, just in front of the face motor area where Broca’s area is said to be. The other two are in the back part of the temporal lobe where Wernicke’s area is said to be. They are separated by an extensive area where stimulation at the same strength fails to block naming. Each of these naming areas is much smaller than the textbook Broca’s or Wernicke’s area. Each area seems to have rather sharp boundaries, for moving the handheld stimulator by less than half the width of a pencil eraser changes the effect from blocking naming to not blocking it. The pattern found in Neil is the most common one seen in a series of patients undergoing such operations on the language side of the brain. This pattern of brain organization, with multiple discrete areas separated by gaps, is also commonly seen in studies of sensory and motor maps in many primates.

Many different patterns of naming areas are encountered in patients with left-brain dominance for language. In a few such patients, only frontal naming areas could be identified: apparently these patients have no posterior language area, although their language seems normal. In a few other patients, only temporal naming areas are present: stimulating Broca’s area simply does not disrupt naming.

It is particularly difficult to be sure of the location of Wernicke’s area using the naming test. There does not seem to be any one consistent temporal-lobe site for naming in most of these patients. Some patients have temporal naming areas in the rear, others midway along the sylvian fissure. Broca’s area is a little more consistent: nearly 80 percent of patients had a naming area somewhere near Broca’s area — that is, in the part of the frontal lobe just in front of face motor cortex. In some patients, naming sites cover less than the traditional Broca’s area, while in others the sites where stimulation causes anomia extend farther forward or upward.

This degree of variability in the location of language was unexpected. It might be a result of language areas appearing quite recently in evolution, so that they may not yet have settled into a consistent pattern across all humans.
But language is not alone in this variability; sensory and motor maps in cats and monkeys have also shown considerable variability in exact cortical location.

[FIGURE 22 Women’s brains respond differently to damage]

Does the variability make any difference in performance? (That was one of Neil’s questions before the operation.) Part of the variability in human language areas seems to be associated with sex and IQ. (“Ah, it’s about time that we’re getting to the sexy part,” he said.) Most of the patients having no identifiable Wernicke’s area were female. In the lower-IQ half of the patient population, females were less likely than males to have naming sites in the parietal lobe. Similar differences in language organization between males and females have also been noted from the effects of strokes. Wernicke-area strokes in some women had less effect on language than similar strokes in men. Together these findings suggest that although most men and women have similar language organization, there is a group of women who have fewer naming sites in the rear. What this “means” is still an open question.

If all this language is in the left side of the brain, what are the corresponding areas of the right brain doing? The nineteenth-century English neurologist John Hughlings Jackson was the first to suggest that, just as language was located on the left, we might find visual and spatial functions in the right brain. This idea was ignored for a long time, but now it is widely accepted, even to the point of great exaggeration in the popular mind.

While flipping through the reading material that I handed him for our next meeting, Neil said, “There must be a whole shelf of books claiming to tell you how to tap the right brain. And become more creative, escaping the domination of your overly logical left brain. Somehow, I don’t see any of those books on your right brain reading list!”


Conversations with Neil's Brain: The Neural Nature of Thought and Language (Addison-Wesley, 1994), co-authored with my neurosurgeon colleague, George Ojemann. It's a tour of the human cerebral cortex, conducted from the operating room, and has been on the New Scientist bestseller list of science books. It is suitable for biology and cognitive neuroscience supplementary reading lists. ISBN 0-201-48337-8. Availability: widespread (softcover, US$12).
